linux-tracing-workshop-part 1

记录linux-tracing-workshop实验过程,第一部分共七篇。

- 1. Probing Tracepoints with ftrace

- 2. CPU Sampling with perf and Flame Graphs

- 3. Using BPF Tools: Broken File Opens

- 4. Using BPF Tools: Slow File I/O

- 5. Using BPF Tools: Chasing a Memory Leak

- 6. Using BPF Tools: Database and Disk Stats and Stacks

- 7. Using BPF Tools: Node and JVM USDT Probes

1. Probing Tracepoints with ftrace

开启sched:sched_switch tracepoint进行线程切换跟踪

root@ubuntu1604:~# cd /sys/kernel/debug/tracing/

root@ubuntu1604:/sys/kernel/debug/tracing# echo 1 > tracing_on

root@ubuntu1604:/sys/kernel/debug/tracing# cat events/sched/sched_switch/format

name: sched_switch

ID: 273

format:

field:unsigned short common_type; offset:0; size:2; signed:0;

field:unsigned char common_flags; offset:2; size:1; signed:0;

field:unsigned char common_preempt_count; offset:3; size:1; signed:0;

field:int common_pid; offset:4; size:4; signed:1;

field:char prev_comm[16]; offset:8; size:16; signed:1;

field:pid_t prev_pid; offset:24; size:4; signed:1;

field:int prev_prio; offset:28; size:4; signed:1;

field:long prev_state; offset:32; size:8; signed:1;

field:char next_comm[16]; offset:40; size:16; signed:1;

field:pid_t next_pid; offset:56; size:4; signed:1;

field:int next_prio; offset:60; size:4; signed:1;

print fmt: "prev_comm=%s prev_pid=%d prev_prio=%d prev_state=%s%s ==> next_comm=%s next_pid=%d next_prio=%d", REC->prev_comm, REC->prev_pid, REC->prev_prio, REC->prev_state & (2048-1) ? __print_flags(REC->prev_state & (2048-1), "|", { 1, "S"} , { 2, "D" }, { 4, "T" }, { 8, "t" }, { 16, "Z" }, { 32, "X" }, { 64, "x" }, { 128, "K" }, { 256, "W" }, { 512, "P" }, { 1024, "N" }) : "R", REC->prev_state & 2048 ? "+" : "", REC->next_comm, REC->next_pid, REC->next_prio

root@ubuntu1604:/sys/kernel/debug/tracing# echo 1 > events/sched/sched_switch/enable

root@ubuntu1604:/sys/kernel/debug/tracing# cat trace

# tracer: nop

#

# entries-in-buffer/entries-written: 703/703 #P:1

#

# _-----=> irqs-off

# / _----=> need-resched

# | / _---=> hardirq/softirq

# || / _--=> preempt-depth

# ||| / delay

# TASK-PID CPU# |||| TIMESTAMP FUNCTION

# | | | |||| | |

bash-817 [000] d... 371.932169: sched_switch: prev_comm=bash prev_pid=817 prev_prio=120 prev_state=R ==> next_comm=kworker/u128:3 next_pid=80 next_prio=120

kworker/u128:3-80 [000] d... 371.932187: sched_switch: prev_comm=kworker/u128:3 prev_pid=80 prev_prio=120 prev_state=S ==> next_comm=sshd next_pid=790 next_prio=120

sshd-790 [000] d... 371.932226: sched_switch: prev_comm=sshd prev_pid=790 prev_prio=120 prev_state=S ==> next_comm=bash next_pid=817 next_prio=120

bash-817 [000] d... 371.932236: sched_switch: prev_comm=bash prev_pid=817 prev_prio=120 prev_state=S ==> next_comm=swapper/0 next_pid=0 next_prio=120

<idle>-0 [000] d... 371.935521: sched_switch: prev_comm=swapper/0 prev_pid=0 prev_prio=120 prev_state=R ==> next_comm=rcu_sched next_pid=7 next_prio=120

rcu_sched-7 [000] d... 371.935525: sched_switch: prev_comm=rcu_sched prev_pid=7 prev_prio=120 prev_state=S ==> next_comm=swapper/0 next_pid=0 next_prio=120

<idle>-0 [000] d... 371.939514: sched_switch: prev_comm=swapper/0 prev_pid=0 prev_prio=120 prev_state=R ==> next_comm=rcu_sched next_pid=7 next_prio=120

rcu_sched-7 [000] d... 371.939517: sched_switch: prev_comm=rcu_sched prev_pid=7 prev_prio=120 prev_state=S ==> next_comm=swapper/0 next_pid=0 next_prio=120

<idle>-0 [000] d... 371.943513: sched_switch: prev_comm=swapper/0 prev_pid=0 prev_prio=120 prev_state=R ==> next_comm=rcu_sched next_pid=7 next_prio=120

rcu_sched-7 [000] d... 371.943516: sched_switch: prev_comm=rcu_sched prev_pid=7 prev_prio=120 prev_state=S ==> next_comm=swapper/0 next_pid=0 next_prio=120

<idle>-0 [000] d... 371.947521: sched_switch: prev_comm=swapper/0 prev_pid=0 prev_prio=120 prev_state=R ==> next_comm=rcu_sched next_pid=7 next_prio=120

rcu_sched-7 [000] d... 371.947525: sched_switch: prev_comm=rcu_sched prev_pid=7 prev_prio=120 prev_state=S ==> next_comm=swapper/0 next_pid=0 next_prio=120

<idle>-0 [000] d... 371.951522: sched_switch: prev_comm=swapper/0 prev_pid=0 prev_prio=120 prev_state=R ==> next_comm=rcu_sched next_pid=7 next_prio=120

rcu_sched-7 [000] d... 371.951527: sched_switch: prev_comm=rcu_sched prev_pid=7 prev_prio=120 prev_state=S ==> next_comm=swapper/0 next_pid=0 next_prio=120

<idle>-0 [000] d... 371.955518: sched_switch: prev_comm=swapper/0 prev_pid=0 prev_prio=120 prev_state=R ==> next_comm=rcu_sched next_pid=7 next_prio=120

rcu_sched-7 [000] d... 371.955522: sched_switch: prev_comm=rcu_sched prev_pid=7 prev_prio=120 prev_state=S ==> next_comm=swapper/0 next_pid=0 next_prio=120

<idle>-0 [000] d... 371.959515: sched_switch: prev_comm=swapper/0 prev_pid=0 prev_prio=120 prev_state=R ==> next_comm=rcu_sched next_pid=7 next_prio=120

rcu_sched-7 [000] d... 371.959518: sched_switch: prev_comm=rcu_sched prev_pid=7 prev_prio=120 prev_state=S ==> next_comm=swapper/0 next_pid=0 next_prio=120

<idle>-0 [000] d... 371.963516: sched_switch: prev_comm=swapper/0 prev_pid=0 prev_prio=120 prev_state=R ==> next_comm=rcu_sched next_pid=7 next_prio=120

echo 0 > events/sched/sched_switch/enable

开启function tracer

函数调用

root@ubuntu1604:/sys/kernel/debug/tracing# echo function > current_tracer

root@ubuntu1604:/sys/kernel/debug/tracing# echo vfs_write > set_ftrace_filter

root@ubuntu1604:/sys/kernel/debug/tracing# cat trace

qemu-ga-449 [000] .... 591.951939: vfs_write <-SyS_write

qemu-ga-449 [000] .... 591.951965: vfs_write <-SyS_write

qemu-ga-449 [000] .... 591.952138: vfs_write <-SyS_write

qemu-ga-449 [000] .... 591.952222: vfs_write <-SyS_write

qemu-ga-449 [000] .... 591.952247: vfs_write <-SyS_write

qemu-ga-449 [000] .... 591.957259: vfs_write <-SyS_write

qemu-ga-449 [000] .... 591.957331: vfs_write <-SyS_write

qemu-ga-449 [000] .... 591.957356: vfs_write <-SyS_write

qemu-ga-449 [000] .... 591.957516: vfs_write <-SyS_write

rs:main Q:Reg-425 [000] .... 591.957797: vfs_write <-SyS_write

查看具体的函数路径

root@ubuntu1604:/sys/kernel/debug/tracing# echo function_graph > current_tracer

root@ubuntu1604:/sys/kernel/debug/tracing# echo > set_ftrace_filter

root@ubuntu1604:/sys/kernel/debug/tracing# echo vfs_write > set_graph_function

root@ubuntu1604:/sys/kernel/debug/tracing# cat trace

0) sshd-902 => rs:main-425

------------------------------------------

0) | vfs_write() {

0) | rw_verify_area() {

0) | security_file_permission() {

0) | apparmor_file_permission() {

0) | common_file_perm() {

0) 0.137 us | aa_file_perm();

0) 0.959 us | }

0) 1.468 us | }

0) 2.035 us | }

0) 2.605 us | }

指定函数深度

echo 2 > max_graph_depth

关闭

# echo nop > current_tracer

# echo > set_graph_function

2. CPU Sampling with perf and Flame Graphs

普通程序

编译程序:

gcc -g -fno-omit-frame-pointer -fopenmp primes.c -o primes

root@ubuntu1604:~/linux-tracing-workshop# export OMP_NUM_THREADS=16

root@ubuntu1604:~/linux-tracing-workshop# perf record -g -F 997 -- ./primes

-g表示capture stack,-F表示采用频率,会在当前目录生成perf.data。

root@ubuntu1604:~/linux-tracing-workshop# perf report --stdio

# To display the perf.data header info, please use --header/--header-only options.

#

#

# Total Lost Samples: 0

#

# Samples: 7K of event 'cpu-clock'

# Event count (approx.): 7265797196

#

# Children Self Command Shared Object Symbol

# ........ ........ ....... ................. ...............................

#

99.99% 0.00% primes primes [.] main._omp_fn.0

|

---main._omp_fn.0

|

|--99.97%-- is_prime

| |

| |--85.01%-- is_divisible

| | |

| | |--0.08%-- retint_user

| | | prepare_exit_to_usermode

| | | exit_to_usermode_loop

可以看到is_divisible花费时间很多,perf annotate可以看到更详细的情况。

root@ubuntu1604:~/linux-tracing-workshop# perf annotate

is_divisible /root/linux-tracing-workshop/primes

│

│ int is_divisible(int n, int d)

│ {

0.02 │ push %rbp

│ mov %rsp,%rbp

5.98 │ mov %edi,-0x4(%rbp)

│ mov %esi,-0x8(%rbp)

│ return n % d == 0;

│ mov -0x4(%rbp),%eax

3.51 │ cltd

2.75 │ idivl -0x8(%rbp)

71.34 │ mov %edx,%eax

│ test %eax,%eax

5.51 │ sete %al

5.82 │ movzbl %al,%eax

│ }

5.07 │ pop %rbp

│ ← retq

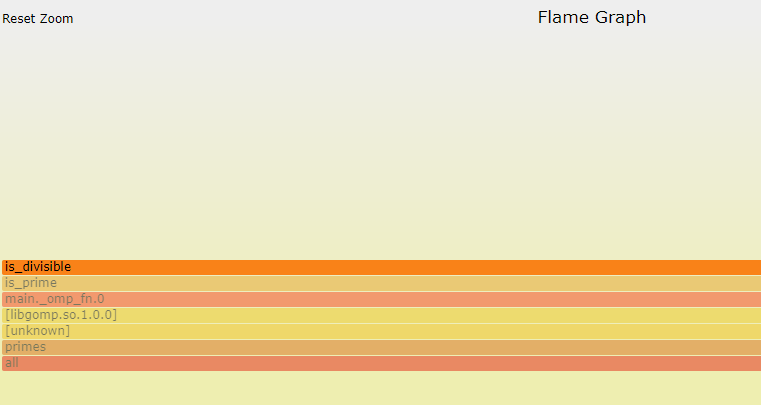

查看火焰图:

# perf script > primes.stacks

# FlameGraph/stackcollapse-perf.pl primes.stacks > primes.collapsed

# FlameGraph/flamegraph.pl primes.collapsed > primes.svg

从火焰图上可以看出确实是is_divisible这个函数用时多。

Java程序

下载安装 perf_map_agent

启动示例,先不要按Enter:

root@ubuntu1604:~/linux-tracing-workshop# java -XX:+PreserveFramePointer -XX:-Inline slowy/App

Press ENTER to start.

另一个终端:

root@ubuntu1604:~/perf-map-agent/bin# jps

20274 App

20285 Jps

root@ubuntu1604:~/perf-map-agent/bin# ./perf-java-report-stack 20274

接着在第一个终端开始,第二个终端可以看到数据,可以看到是isDivisible花了时间:

Samples: 1K of event 'cpu-clock', Event count (approx.): 11969696850

Children Self Command Shared Object Symbol

+ 100.00% 0.00% java perf-20274.map [.] call_stub

+ 100.00% 0.00% java libjvm.so [.] 0xffff801f982d987b

+ 100.00% 0.00% java libjvm.so [.] 0xffff801f982fb52e

+ 100.00% 0.00% java libjvm.so [.] 0xffff801f982fde5f

+ 100.00% 0.00% java libjli.so [.] 0xffff801f96337552

+ 100.00% 0.00% java libjli.so [.] 0xffff801f9633b3dd

+ 100.00% 0.00% java libpthread-2.23.so [.] start_thread

+ 89.79% 10.63% java perf-20274.map [.] Lslowy/App;::isPrime

+ 89.20% 89.03% java perf-20274.map [.] Lslowy/App;::isDivisible

+ 72.07% 0.00% java perf-20274.map [.] Lslowy/App;::main

+ 17.13% 0.00% java perf-20274.map [.] Lslowy/App;::main

+ 10.80% 0.08% java perf-20274.map [.] Interpreter

+ 0.08% 0.00% java libjvm.so [.] 0xffff801f97f82ab0

+ 0.08% 0.00% java [kernel.kallsyms] [k] schedule

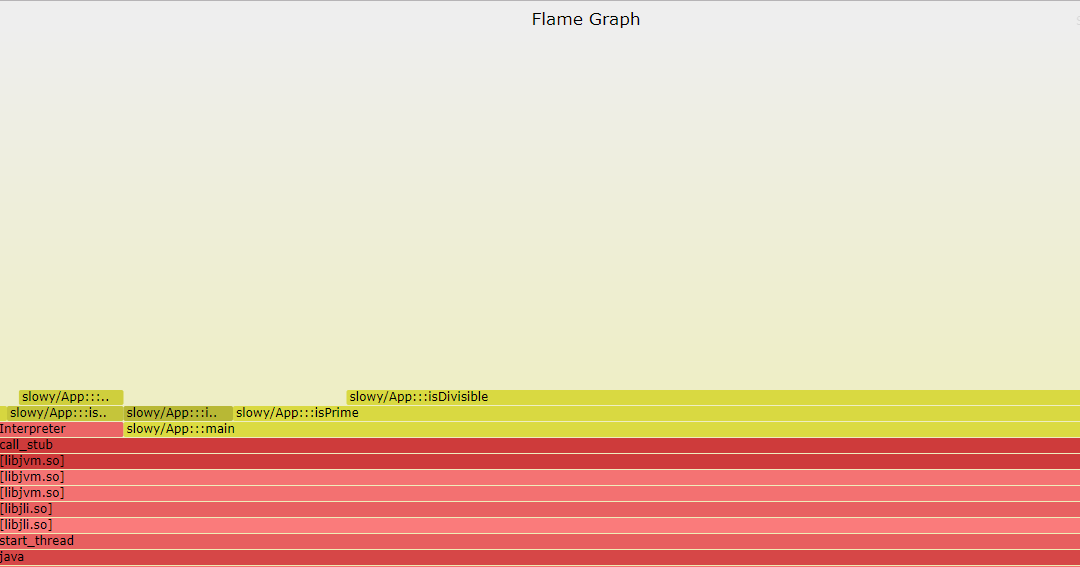

生成火焰图:

root@ubuntu1604:~/perf-map-agent/bin# cd /tmp/

root@ubuntu1604:/tmp# ls

hsperfdata_root java.svg perf-18877.data.old perf-20210.data perf-20210.map perf-20274.data perf-20274.map perf.data perf-vdso.so-4k55kH perf-vdso.so-HGHJGC

root@ubuntu1604:/tmp# mv perf-20274.data perf.data

root@ubuntu1604:/tmp# perf script | ~/FlameGraph/stackcollapse-perf.pl | ~/FlameGraph/flamegraph.pl --colors=java > java.svg

Node程序

Node程序需要带–perf_basic_prof启动。进入nodey启动run.sh

root@ubuntu1604:~/linux-tracing-workshop/nodey# ./run.sh perf

另一个终端开启perf

root@ubuntu1604:~/perf-map-agent/bin# perf record -F 97 -p $(pgrep -n node) -g

第一个终端开始测试

root@ubuntu1604:~/linux-tracing-workshop/nodey# ab -c 10 -n 100 -m POST 'http://localhost:3000/users/auth?username=foo&password=wrong'

终止可以查看数据

root@ubuntu1604:~/perf-map-agent/bin# perf report -i perf.data

当然,也可以生成火焰图

root@ubuntu1604:~/perf-map-agent/bin# perf script -i perf.data | ~/FlameGraph/stackcollapse-perf.pl | ~/FlameGraph/flamegraph.pl > node.svg

3. Using BPF Tools: Broken File Opens

这个实验会使用BCC Tool对程序启动过程的错误进行排查。

首先,编译有问题的程序:

gcc -g -fno-omit-frame-pointer -O0 server.c -o server

运行server,虽然现实启动成功,但是它并没有初始化成功。看起来程序是卡住了, 使用top可以发现server的cpu消耗还是挺多的. 查看server程序用户态和内核态的cpu利用率:

root@ubuntu1604:~# pidstat -u -p $(pidof server) 1

Linux 4.4.0-21-generic (ubuntu1604) 12/04/2017 _x86_64_ (1 CPU)

12:46:39 PM UID PID %usr %system %guest %CPU CPU Command

12:46:40 PM 0 29947 1.15 11.49 0.00 12.64 0 server

12:46:41 PM 0 29947 1.18 11.76 0.00 12.94 0 server

12:46:42 PM 0 29947 5.62 6.74 0.00 12.36 0 server

12:46:43 PM 0 29947 2.30 10.34 0.00 12.64 0 server

12:46:44 PM 0 29947 1.11 11.11 0.00 12.22 0 server

12:46:45 PM 0 29947 2.27 9.09 0.00 11.36 0 server

q12:46:46 PM 0 29947 3.53 10.59 0.00 14.12 0 server

12:46:47 PM 0 29947 1.16 11.63 0.00 12.79 0 server

可以看到,server在内核态的时候比较多。使用syscount查看,可以看到,server调用nanosleep和open这两个syscall比较频繁。

root@ubuntu1604:~/perf-tools/bin# ./syscount -cp $(pidof server)

Tracing PID 29947... Ctrl-C to end.

^CSYSCALL COUNT

nanosleep 202027

open 202054

root@ubuntu1604:~/perf-tools/bin#

opensnoop可以查看进程issue的open请求:

root@ubuntu1604:~# opensnoop -p $(pidof server) -d 0.01

Tracing open()s issued by PID 910 for 0.01 seconds (buffered)...

COMM PID FD FILE

server 910 -1 /etc/tracing-server-example.conf

server 910 -1 /etc/tracing-server-example.conf

server 910 -1 /etc/tracing-server-example.conf

server 910 -1 /etc/tracing-server-example.conf

server 910 -1 /etc/tracing-server-example.conf

server 910 -1 /etc/tracing-server-example.conf

server 910 -1 /etc/tracing-server-example.conf

server 910 -1 /etc/tracing-server-example.conf

server 910 -1 /etc/tracing-server-example.conf

这样问题的基本原因就大概清楚了,server试图访问/etc/tracing-server-example.conf文件,但是该文件并不存在,所以没有初始化成功。

我们也可以用argdist来查看函数或者系统调用的参数。比如我们查看nanosleep的参数,可以发现大多数都是在512~1023之间,server中的nanosleep是1000。

root@ubuntu1604:~/bcc/tools# ./argdist.py -p $(pidof server) -H 'p::SyS_nanosleep(struct timespec *time):u64:time->tv_nsec'

[14:09:10]

time->tv_nsec : count distribution

0 -> 1 : 0 | |

2 -> 3 : 0 | |

4 -> 7 : 0 | |

8 -> 15 : 0 | |

16 -> 31 : 0 | |

32 -> 63 : 0 | |

64 -> 127 : 0 | |

128 -> 255 : 0 | |

256 -> 511 : 0 | |

512 -> 1023 : 15864 |****************************************|

[14:09:11]

time->tv_nsec : count distribution

0 -> 1 : 0 | |

2 -> 3 : 0 | |

4 -> 7 : 0 | |

8 -> 15 : 0 | |

16 -> 31 : 0 | |

32 -> 63 : 0 | |

64 -> 127 : 0 | |

128 -> 255 : 0 | |

256 -> 511 : 0 | |

512 -> 1023 : 15890 |****************************************|

[14:09:12]

类似的,我们查看open的参数和返回值

root@ubuntu1604:~/bcc/tools# ./argdist.py -p $(pidof server) -C 'p:c:open(char *filename):char*:filename'

[14:13:04]

p:c:open(char *filename):char*:filename

COUNT EVENT

14626 filename = /etc/tracing-server-example.conf

[14:13:05]

p:c:open(char *filename):char*:filename

COUNT EVENT

14606 filename = /etc/tracing-server-example.conf

[14:13:06]

p:c:open(char *filename):char*:filename

COUNT EVENT

14482 filename = /etc/tracing-server-example.conf

[14:13:07]

p:c:open(char *filename):char*:filename

COUNT EVENT

14400 filename = /etc/tracing-server-example.conf

^Croot@ubuntu1604:~/bcc/tools# ./argdist.py -p $(pidof server) -C 'r:c:open():int:$retval'

[14:13:28]

r:c:open():int:$retval

COUNT EVENT

14451 $retval = -1

[14:13:29]

r:c:open():int:$retval

COUNT EVENT

14441 $retval = -1

^Croot@ubuntu1604:~/bcc/tools#

4. Using BPF Tools: Slow File I/O

这个实验会跟踪IO latency比较大的情况。

首先编译并允许实验程序。

root@ubuntu1604:~/linux-tracing-workshop# gcc -g -fno-omit-frame-pointer -O0 -pthread logger.c -o logger

root@ubuntu1604:~/linux-tracing-workshop# ./logger

假设你知道了logger程序的延迟会偶尔比较大(所以让你来解决这个问题:))。先怀疑是因为存在slow IO操作导致延迟大。使用biolatency看看:

root@ubuntu1604:/usr/share/bcc/tools# ./biolatency 1

Tracing block device I/O... Hit Ctrl-C to end.

usecs : count distribution

0 -> 1 : 0 | |

2 -> 3 : 0 | |

4 -> 7 : 0 | |

8 -> 15 : 0 | |

16 -> 31 : 0 | |

32 -> 63 : 0 | |

64 -> 127 : 0 | |

128 -> 255 : 0 | |

256 -> 511 : 0 | |

512 -> 1023 : 24 |********* |

1024 -> 2047 : 98 |****************************************|

2048 -> 4095 : 1 | |

4096 -> 8191 : 1 | |

8192 -> 16383 : 5 |** |

usecs : count distribution

0 -> 1 : 0 | |

2 -> 3 : 0 | |

4 -> 7 : 0 | |

8 -> 15 : 0 | |

16 -> 31 : 0 | |

32 -> 63 : 0 | |

64 -> 127 : 0 | |

128 -> 255 : 0 | |

256 -> 511 : 0 | |

512 -> 1023 : 26 |********** |

1024 -> 2047 : 102 |****************************************|

2048 -> 4095 : 1 | |

4096 -> 8191 : 0 | |

8192 -> 16383 : 4 |* |

^C

usecs : count distribution

0 -> 1 : 0 | |

2 -> 3 : 0 | |

4 -> 7 : 0 | |

8 -> 15 : 0 | |

16 -> 31 : 0 | |

32 -> 63 : 0 | |

64 -> 127 : 0 | |

128 -> 255 : 0 | |

256 -> 511 : 0 | |

512 -> 1023 : 20 |******** |

1024 -> 2047 : 93 |****************************************|

2048 -> 4095 : 8 |*** |

4096 -> 8191 : 1 | |

8192 -> 16383 : 5 |** |

root@ubuntu1604:/usr/share/bcc/tools#

从上面可以看到大部分的IO操作都很快,但是也有一些IO操作比较耗时。使用biosnoop看看,可以看到有一些logger的IO操作明显比较大。

root@ubuntu1604:/usr/share/bcc/tools# ./biosnoop

TIME(s) COMM PID DISK T SECTOR BYTES LAT(ms)

0.000000000 logger 2120 vda W 3230184 4096 1.49

0.001188000 jbd2/vda1-8 173 vda W 1611120 8192 1.15

0.002201000 jbd2/vda1-8 173 vda W 1611136 4096 0.90

0.023616000 logger 2120 vda W 3230192 4096 1.22

0.024938000 jbd2/vda1-8 173 vda W 1611144 8192 1.29

0.026173000 jbd2/vda1-8 173 vda W 1611160 4096 1.13

0.047631000 logger 2120 vda W 3230192 4096 1.23

0.048968000 jbd2/vda1-8 173 vda W 1611168 8192 1.30

0.050024000 jbd2/vda1-8 173 vda W 1611184 4096 0.95

0.071440000 logger 2120 vda W 3230192 4096 1.20

0.072585000 jbd2/vda1-8 173 vda W 1611192 8192 1.11

0.073800000 jbd2/vda1-8 173 vda W 1611208 4096 1.09

0.090548000 logger 2121 vda W 3217408 1048576 9.84

0.091801000 jbd2/vda1-8 173 vda W 1611216 8192 1.16

0.093033000 jbd2/vda1-8 173 vda W 1611232 4096 1.13

单独看看logger进程,可以发现logger偶尔会有很慢的IO操作:

^Croot@ubuntu1604:/usr/share/bcc/tools# ./biosnoop -p $(pidof logger)

TIME(s) COMM PID DISK T SECTOR BYTES LAT(ms)

0.000000000 logger 2120 vda W 3235144 4096 1.15

0.001174000 jbd2/vda1-8 173 vda W 1609744 8192 1.10

0.002295000 jbd2/vda1-8 173 vda W 1609760 4096 1.01

0.023569000 logger 2120 vda W 3235144 4096 1.06

0.024656000 jbd2/vda1-8 173 vda W 1609768 8192 1.05

0.025822000 jbd2/vda1-8 173 vda W 1609784 4096 1.07

0.037940000 logger 2121 vda W 3217408 1048576 9.31

0.039198000 jbd2/vda1-8 173 vda W 1609792 8192 1.16

0.040218000 jbd2/vda1-8 173 vda W 1609808 4096 0.92

使用fileslower,可以看出来,logger延迟比较大的原因是写1M数据到log.data,超过了普通的1024字节。

root@ubuntu1604:~/bcc/tools# ./fileslower.py 1

Tracing sync read/writes slower than 1 ms

TIME(s) COMM TID D BYTES LAT(ms) FILENAME

0.027 logger 1182 W 1024 3.79 log.data

0.055 logger 1182 W 1024 8.35 log.data

0.079 logger 1182 W 1024 3.62 log.data

0.103 logger 1182 W 1024 3.81 log.data

0.126 logger 1182 W 1024 3.71 log.data

0.150 logger 1182 W 1024 3.67 log.data

0.174 logger 1182 W 1024 3.68 log.data

0.198 logger 1182 W 1024 3.97 log.data

0.219 logger 1183 W 1048576 13.95 flush.data

0.222 logger 1182 W 1024 3.78 log.data

0.245 logger 1182 W 1024 3.54 log.data

0.269 logger 1182 W 1024 3.55 log.data

0.293 logger 1182 W 1024 3.76 log.data

0.317 logger 1182 W 1024 3.89 log.data

0.341 logger 1182 W 1024 3.85 log.data

0.364 logger 1182 W 1024 3.49 log.data

0.389 logger 1182 W 1024 4.96 log.data

0.413 logger 1182 W 1024 3.90 log.data

0.431 logger 1183 W 1048576 12.00 flush.data

5.Using BPF Tools: Chasing a Memory Leak

该实验中,用BPF的工具memleak检查一个程序的内存泄漏。

编译程序之后运行,输入文件名,统计,使用htop可以看到wordcount的内存使用一直在增加。

g++ -fno-omit-frame-pointer -std=c++11 -g wordcount.cc -o wordcount

使用memleak可以是否有内存泄漏。

root@ubuntu1604:/usr/share/bcc/tools/old# ./memleak -p $(pidof wordcount)

memleak默认每隔5s会打印出已经分配了,但是没有free掉的内存。

[18:15:21] Top 10 stacks with outstanding allocations: [18:15:36] Top 10 stacks with outstanding allocations: 34 bytes in 2 allocations from stack [unknown]

35 bytes in 2 allocations from stack

[unknown]

[unknown]

64 bytes in 2 allocations from stack

std::allocator_traits<std::allocator<std::_Sp_counted_ptr_inplace<input_reader, std::allocator<input_reader>, (__gnu_cxx::_Lock_policy)2> > >::allocate(std::allocator<std::_Sp_counted_ptr_inplace<input_reader, std::allocator<input_reader>, (__gnu_cxx::_Lock_policy)2> >&, unsigned long)+0x28

std::__allocated_ptr<std::allocator<std::_Sp_counted_ptr_inplace<input_reader, std::allocator<input_reader>, (__gnu_cxx::_Lock_policy)2> > > std::__allocate_guarded<std::allocator<std::_Sp_counted_ptr_inplace<input_reader, std::allocator<input_reader>, (__gnu_cxx::_Lock_policy)2> > >(std::allocator<std::_Sp_counted_ptr_inplace<input_reader, std::allocator<input_reader>, (__gnu_cxx::_Lock_policy)2> >&)+0x21

std::__shared_count<(__gnu_cxx::_Lock_policy)2>::__shared_count<input_reader, std::allocator<input_reader>>(std::_Sp_make_shared_tag, input_reader*, std::allocator<input_reader> const&)+0x59

std::__shared_ptr<input_reader, (__gnu_cxx::_Lock_policy)2>::__shared_ptr<std::allocator<input_reader>>(std::_Sp_make_shared_tag, std::allocator<input_reader> const&)+0x3c

std::shared_ptr<input_reader>::shared_ptr<std::allocator<input_reader>>(std::_Sp_make_shared_tag, std::allocator<input_reader> const&)+0x28

std::shared_ptr<input_reader> std::allocate_shared<input_reader, std::allocator<input_reader>>(std::allocator<input_reader> const&)+0x37

std::shared_ptr<input_reader> std::make_shared<input_reader>()+0x3b

main+0x3b

__libc_start_main+0xf0

128 bytes in 2 allocations from stack

std::allocator_traits<std::allocator<std::_Sp_counted_ptr_inplace<word_counter, std::allocator<word_counter>, (__gnu_cxx::_Lock_policy)2> > >::allocate(std::allocator<std::_Sp_counted_ptr_inplace<word_counter, std::allocator<word_counter>, (__gnu_cxx::_Lock_policy)2> >&, unsigned long)+0x28

std::__allocated_ptr<std::allocator<std::_Sp_counted_ptr_inplace<word_counter, std::allocator<word_counter>, (__gnu_cxx::_Lock_policy)2> > > std::__allocate_guarded<std::allocator<std::_Sp_counted_ptr_inplace<word_counter, std::allocator<word_counter>, (__gnu_cxx::_Lock_policy)2> > >(std::allocator<std::_Sp_counted_ptr_inplace<word_counter, std::allocator<word_counter>, (__gnu_cxx::_Lock_policy)2> >&)+0x21

std::__shared_count<(__gnu_cxx::_Lock_policy)2>::__shared_count<word_counter, std::allocator<word_counter>, std::shared_ptr<input_reader>&>(std::_Sp_make_shared_tag, word_counter*, std::allocator<word_counter> const&, std::shared_ptr<input_reader>&)+0x5f

std::__shared_ptr<word_counter, (__gnu_cxx::_Lock_policy)2>::__shared_ptr<std::allocator<word_counter>, std::shared_ptr<input_reader>&>(std::_Sp_make_shared_tag, std::allocator<word_counter> const&, std::shared_ptr<input_reader>&)+0x50

std::shared_ptr<word_counter>::shared_ptr<std::allocator<word_counter>, std::shared_ptr<input_reader>&>(std::_Sp_make_shared_tag, std::allocator<word_counter> const&, std::shared_ptr<input_reader>&)+0x3c

std::shared_ptr<word_counter> std::allocate_shared<word_counter, std::allocator<word_counter>, std::shared_ptr<input_reader>&>(std::allocator<word_counter> const&, std::shared_ptr<input_reader>&)+0x4b

_ZSt11make_sharedI12word_counterIRSt10shared_ptrI12input_readerEEES1_IT_EDpOT0_+0x51

main+0x4e

__libc_start_main+0xf0

1767 bytes in 97 allocations from stack

???

4194304 bytes in 1 allocations from stack

std::allocator_traits<std::allocator<std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > > >::allocate(std::allocator<std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > >&, unsigned long)+0x28

std::_Vector_base<std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> >, std::allocator<std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > > >::_M_allocate(unsigned long)+0x2a

void std::vector<std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> >, std::allocator<std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > > >::_M_emplace_back_aux<std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > const&>(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > const&)+0x3e

std::vector<std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> >, std::allocator<std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > > >::push_back(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > const&)+0x69

std::back_insert_iterator<std::vector<std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> >, std::allocator<std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > > > >::operator=(std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > const&)+0x26

std::back_insert_iterator<std::vector<std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> >, std::allocator<std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > > > > std::__copy_move<false, false, std::input_iterator_tag>::__copy_m<std::istream_iterator<std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> >, char, std::char_traits<char>, long>, std::back_insert_iterator<std::vector<std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> >, std::allocator<std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > > > > >(std::istream_iterator<std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> >, char, std::char_traits<char>, long>, std::istream_iterator<std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> >, char, std::char_traits<char>, long>, std::back_insert_iterator<std::vector<std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> >, std::allocator<std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > > > >)+0x52

std::back_insert_iterator<std::vector<std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> >, std::allocator<std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > > > > std::__copy_move_a<false, std::istream_iterator<std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> >, char, std::char_traits<char>, long>, std::back_insert_iterator<std::vector<std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> >, std::allocator<std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > > > > >(std::istream_iterator<std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> >, char, std::char_traits<char>, long>, std::istream_iterator<std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> >, char, std::char_traits<char>, long>, std::back_insert_iterator<std::vector<std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> >, std::allocator<std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > > > >)+0x7d

std::back_insert_iterator<std::vector<std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> >, std::allocator<std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > > > > std::__copy_move_a2<false, std::istream_iterator<std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> >, char, std::char_traits<char>, long>, std::back_insert_iterator<std::vector<std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> >, std::allocator<std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > > > > >(std::istream_iterator<std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> >, char, std::char_traits<char>, long>, std::istream_iterator<std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> >, char, std::char_traits<char>, long>, std::back_insert_iterator<std::vector<std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> >, std::allocator<std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > > > >)+0xb6

std::back_insert_iterator<std::vector<std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> >, std::allocator<std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > > > > std::copy<std::istream_iterator<std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> >, char, std::char_traits<char>, long>, std::back_insert_iterator<std::vector<std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> >, std::allocator<std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > > > > >(std::istream_iterator<std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> >, char, std::char_traits<char>, long>, std::istream_iterator<std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> >, char, std::char_traits<char>, long>, std::back_insert_iterator<std::vector<std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> >, std::allocator<std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > > > >)+0xa8

word_counter::word_count[abi:cxx11]()+0xfc

我们看到最大的一块没有释放的内存是在word_counter::word_count函数调用copy时候,还有一个是main中的std::shared_ptr指向word_counter的指针也没有释放。这就很奇怪了,因为shared_ptr会在对象退出scope时候自动析构。这个时候看代码:

int main()

{

bool done = false;

while (!done)

{

auto reader = std::make_shared<input_reader>();

auto counter = std::make_shared<word_counter>(reader);

reader->set_counter(counter);

auto counts = counter->word_count();

done = counter->done();

for (auto const& wc : counts)

{

std::cout << wc.first << " " << wc.second << '\n';

}

}

return 0;

}

原因就明了了,reader和counter发生了循环引用, 这样input_reader和word_counter的析构函数都是没有办法自动执行的。

6. Using BPF Tools: Database and Disk Stats and Stacks

在这个实验中将会使用BCC的tool去观察磁盘应能以及数据的query性能。

观察mysql的插入

首先安装, 启动 mysql:

root@ubuntu1604:~/linux-tracing-workshop# apt install mysql-server

root@ubuntu1604:~/linux-tracing-workshop# systemctl start mysql

创建好一个database之后运行:

root@ubuntu1604:~/linux-tracing-workshop# ./data_access.py insert

上述脚本会一直插入记录。

运行biotop会发现mysql一直比较忙:

root@ubuntu1604:/usr/share/bcc/tools# ./biotop

10:13:46 loadavg: 1.56 0.62 0.34 1/141 7738

PID COMM D MAJ MIN DISK I/O Kbytes AVGms

173 jbd2/vda1-8 W 253 0 vda 30 180 1.15

7007 mysqld W 253 0 vda 13 52 1.13

6985 mysqld W 253 0 vda 1 16 4.84

6989 mysqld W 253 0 vda 1 16 1.39

查看单个io的具体细节:

root@ubuntu1604:/usr/share/bcc/tools# ./biosnoop

TIME(s) COMM PID DISK T SECTOR BYTES LAT(ms)

0.000000000 mysqld 7007 vda W 11881528 4096 1.07

0.001324000 jbd2/vda1-8 173 vda W 1594504 8192 1.29

0.003013000 jbd2/vda1-8 173 vda W 1594520 4096 1.59

0.004724000 mysqld 7007 vda W 11881528 4096 1.06

0.005880000 jbd2/vda1-8 173 vda W 1594528 8192 1.12

0.007384000 jbd2/vda1-8 173 vda W 1594544 4096 1.40

0.009750000 mysqld 7007 vda W 11881528 4096 1.75

0.011242000 jbd2/vda1-8 173 vda W 1594552 8192 1.46

0.012463000 jbd2/vda1-8 173 vda W 1594568 4096 1.09

0.014872000 mysqld 7007 vda W 11881528 4096 1.79

0.015912000 jbd2/vda1-8 173 vda W 1594576 8192 1.01

0.017167000 jbd2/vda1-8 173 vda W 1594592 4096 1.14

0.019677000 mysqld 7007 vda W 11881528 4096 1.84

0.021173000 jbd2/vda1-8 173 vda W 1594600 8192 1.46

0.022514000 jbd2/vda1-8 173 vda W 1594616 4096 1.24

0.024218000 mysqld 7007 vda W 11881528 4096 1.07

0.025966000 jbd2/vda1-8 173 vda W 1594624 8192 1.72

0.027062000 jbd2/vda1-8 173 vda W 1594640 4096 1.00

0.028742000 mysqld 7007 vda W 11881528 4096 1.02

0.029930000 jbd2/vda1-8 173 vda W 1594648 8192 1.15

0.031019000 jbd2/vda1-8 173 vda W 1594664 4096 0.98

0.032752000 mysqld 7007 vda W 11881528 4096 1.06

0.033856000 jbd2/vda1-8 173 vda W 1594672 8192 1.07

0.035294000 jbd2/vda1-8 173 vda W 1594688 4096 1.33

使用biolatency看看延迟分布:

root@ubuntu1604:/usr/share/bcc/tools# ./biolatency 5

Tracing block device I/O... Hit Ctrl-C to end.

usecs : count distribution

0 -> 1 : 0 | |

2 -> 3 : 0 | |

4 -> 7 : 0 | |

8 -> 15 : 0 | |

16 -> 31 : 0 | |

32 -> 63 : 0 | |

64 -> 127 : 0 | |

128 -> 255 : 0 | |

256 -> 511 : 0 | |

512 -> 1023 : 748 |************ |

1024 -> 2047 : 2425 |****************************************|

2048 -> 4095 : 74 |* |

4096 -> 8191 : 27 | |

8192 -> 16383 : 11 | |

16384 -> 32767 : 2 | |

32768 -> 65535 : 2 | |

usecs : count distribution

0 -> 1 : 0 | |

2 -> 3 : 0 | |

4 -> 7 : 0 | |

8 -> 15 : 0 | |

16 -> 31 : 0 | |

32 -> 63 : 0 | |

64 -> 127 : 0 | |

128 -> 255 : 0 | |

256 -> 511 : 0 | |

512 -> 1023 : 773 |************* |

1024 -> 2047 : 2370 |****************************************|

2048 -> 4095 : 78 |* |

4096 -> 8191 : 34 | |

8192 -> 16383 : 11 | |

16384 -> 32767 : 4 | |

32768 -> 65535 : 1 | |

我们看到有一些IO延迟确实比较大,看一下提交给IO的内核栈(我的ubuntu 1604运行错误,可能跟内核编译选型有关):

stackcount -i 10 submit_bio

使用fileslower查看比较慢的操作:

root@ubuntu1604:/usr/share/bcc/tools# ./fileslower 1

Tracing sync read/writes slower than 1 ms

TIME(s) COMM TID D BYTES LAT(ms) FILENAME

5.901 rs:main Q:Reg 459 W 92 1.80 auth.log

6.479 mysqld 7007 W 1024 1.48 ib_logfile0

7.952 mysqld 7007 W 512 2.72 ib_logfile0

14.828 mysqld 7007 W 512 5.28 ib_logfile0

14.843 mysqld 7007 W 8704 2.41 ib_logfile0

19.573 mysqld 7007 W 512 2.84 ib_logfile0

36.177 mysqld 7007 W 512 2.98 ib_logfile0

44.049 mysqld 7007 W 512 1.32 ib_logfile0

45.975 mysqld 7007 W 512 2.69 ib_logfile0

45.992 mysqld 7007 W 512 5.47 ib_logfile0

63.595 mysqld 7007 W 512 1.29 ib_logfile0

使用filetop查看mysql在插入row时候的文件访问

^Croot@ubuntu1604:/usr/share/bcc/tools# ./filetop

Tracing... Output every 1 secs. Hit Ctrl-C to end

10:27:08 loadavg: 1.78 1.61 1.12 1/139 7844

TID COMM READS WRITES R_Kb W_Kb T FILE

7844 clear 2 0 8 0 R xterm

7843 filetop 2 0 2 0 R loadavg

7844 clear 1 0 0 0 R libtinfo.so.5.9

7844 clear 1 0 0 0 R libc-2.23.so

7844 filetop 3 0 0 0 R clear

7844 filetop 2 0 0 0 R ld-2.23.so

7843 filetop 1 0 0 0 R id

7007 mysqld 0 1 0 16 R employees.ibd

7007 mysqld 0 239 0 166 R ib_logfile0

10:27:09 loadavg: 1.78 1.61 1.12 1/139 7845

TID COMM READS WRITES R_Kb W_Kb T FILE

7845 clear 2 0 8 0 R xterm

7843 filetop 2 0 2 0 R loadavg

7845 clear 1 0 0 0 R libtinfo.so.5.9

7845 clear 1 0 0 0 R libc-2.23.so

7845 filetop 3 0 0 0 R clear

7845 filetop 2 0 0 0 R ld-2.23.so

7007 mysqld 0 1 0 16 R employees.ibd

7007 mysqld 0 243 0 168 R ib_logfile0

查看IO操作的中断对系统的影响:

root@ubuntu1604:/usr/share/bcc/tools# ./hardirqs 1

Tracing hard irq event time... Hit Ctrl-C to end.

HARDIRQ TOTAL_usecs

virtio1-input.0 161

virtio3-req.0 5684

HARDIRQ TOTAL_usecs

virtio0-input.0 2

virtio1-input.0 108

virtio3-req.0 5639

HARDIRQ TOTAL_usecs

virtio1-input.0 145

virtio3-req.0 5678

HARDIRQ TOTAL_usecs

virtio0-input.0 1

virtio1-input.0 134

virtio3-req.0 5891

HARDIRQ TOTAL_usecs

virtio1-input.0 116

virtio3-req.0 5869

现在观察mysql的查询

插入数据并select:

root@ubuntu1604:~/linux-tracing-workshop# ./data_access.py insert_once

Inserting employees...

root@ubuntu1604:~/linux-tracing-workshop# ./data_access.py select

Selecting employees...

使用biotop,biolatency, fileslower均没有看到有慢的IO操作,猜测是cache命中得比较好。

运行cachestat之后运行select:

root@ubuntu1604:/usr/share/bcc/tools# ./cachestat 1

HITS MISSES DIRTIES READ_HIT% WRITE_HIT% BUFFERS_MB CACHED_MB

0 0 0 0.0% 0.0% 64 1531

0 0 0 0.0% 0.0% 64 1531

0 0 0 0.0% 0.0% 64 1531

1124 0 0 100.0% 0.0% 64 1531

5 2 3 28.6% 0.0% 64 1531

0 0 0 0.0% 0.0% 64 1531

0 0 0 0.0% 0.0% 64 1531

0 0 0 0.0% 0.0% 64 1531

0 0 0 0.0% 0.0% 64 1531

可以看到在某一段时间有读操作,但是之后就没有,可以猜想mysql在内部实现了自己的cache。

关闭系统cache,可以看到确实也没有发生很多read操作。

root@ubuntu1604:/usr/share/bcc/tools# echo 1 > /proc/sys/vm/drop_caches

root@ubuntu1604:/usr/share/bcc/tools# ./cachestat 1

HITS MISSES DIRTIES READ_HIT% WRITE_HIT% BUFFERS_MB CACHED_MB

0 0 0 0.0% 0.0% 0 88

4 0 0 100.0% 0.0% 0 88

6 5 4 18.2% 18.2% 0 88

0 0 0 0.0% 0.0% 0 88

0 0 0 0.0% 0.0% 0 88

3 0 0 100.0% 0.0% 0 88

0 0 0 0.0% 0.0% 0 88

0 0 0 0.0% 0.0% 0 88

0 0 0 0.0% 0.0% 0 88

7. Using BPF Tools: Node and JVM USDT Probes

Node

编译支持USDT的node, 之前要先按照systemtap-sdt-dev:

$ git clone https://github.com/nodejs/node.git

$ cd node

$ git checkout v6.2.1 # or whatever version is currently stable

$ ./configure --prefix=/opt/node --with-dtrace

$ make -j 3

$ sudo make install

使用tplist显示node的USDT probe点:

root@ubuntu1604:/usr/share/bcc/tools# ./tplist -l ~/node/node

/root/node/node node:gc__start

/root/node/node node:gc__done

/root/node/node node:net__server__connection

/root/node/node node:net__stream__end

/root/node/node node:http__server__response

/root/node/node node:http__client__response

/root/node/node node:http__client__request

/root/node/node node:http__server__request

将node运行起来可以查看更多的USDT probe:

root@ubuntu1604:/usr/share/bcc/tools# ./tplist -p $(pidof node)

/lib/x86_64-linux-gnu/libc-2.23.so libc:setjmp

/lib/x86_64-linux-gnu/libc-2.23.so libc:longjmp

/lib/x86_64-linux-gnu/libc-2.23.so libc:longjmp_target

/lib/x86_64-linux-gnu/libc-2.23.so libc:memory_heap_new

/lib/x86_64-linux-gnu/libc-2.23.so libc:memory_arena_reuse_free_list

/lib/x86_64-linux-gnu/libc-2.23.so libc:memory_sbrk_less

/lib/x86_64-linux-gnu/libc-2.23.so libc:memory_arena_reuse_wait

/lib/x86_64-linux-gnu/libc-2.23.so libc:memory_arena_reuse

/lib/x86_64-linux-gnu/libc-2.23.so libc:memory_arena_new

/lib/x86_64-linux-gnu/libc-2.23.so libc:memory_arena_retry

/lib/x86_64-linux-gnu/libc-2.23.so libc:memory_heap_free

/lib/x86_64-linux-gnu/libc-2.23.so libc:memory_heap_less

/lib/x86_64-linux-gnu/libc-2.23.so libc:memory_heap_more

查看probe的详细信息:

root@ubuntu1604:/usr/share/bcc/tools# ./tplist -l ~/node/node -vv '*server__request'

/root/node/node node:http__server__request [sema 0x1c0a034]

location #1 0x1045cf8

argument #1 8 unsigned bytes @ r14

argument #2 8 unsigned bytes @ ax

argument #3 8 unsigned bytes @ *(bp - 4344)

argument #4 4 signed bytes @ *(bp - 4348)

argument #5 8 unsigned bytes @ *(bp - 4304)

argument #6 8 unsigned bytes @ *(bp - 4312)

argument #7 4 signed bytes @ *(bp - 4352)

在node/src/node.stp文件中有每个参数的含义:

probe node_http_server_request = process("node").mark("http__server__request")

{

remote = user_string($arg3);

port = $arg4;

method = user_string($arg5);

url = user_string($arg6);

fd = $arg7;

probestr = sprintf("%s(remote=%s, port=%d, method=%s, url=%s, fd=%d)",

$$name,

remote,

port,

method,

url,

fd);

}

开启server.js:

root@ubuntu1604:~/linux-tracing-workshop# ~/node/node server.js

另一个终端开启trace:

root@ubuntu1604:~/bcc/tools# ./trace.py -p $(pidof node) 'u:/opt/node/node:http__server__request "%s %s", arg5, arg6'

另一个终端发送请求,arg5分别表示method和url:

root@ubuntu1604:~/node/src# curl localhost:8080

Hello, world!root@ubuntu1604:~/node/src# curl localhost:8080/index.html

Hello, world!root@ubuntu1604:~/node/src# curl 'localhost:8080/login?user=dave&pwd=123'

Hello, world!root@ubuntu1604:~/node/src#

从第二个终端可以看到输出:

root@ubuntu1604:~/bcc/tools# ./trace.py -p $(pidof node) 'u:/opt/node/node:http__server__request "%s %s", arg5, arg6'

PID TID COMM FUNC -

25022 25022 node http__server__request GET /

25022 25022 node http__server__request GET /index.html

25022 25022 node http__server__request GET /login?user=dave&pwd=123

JVM

下载tapset

查看USDT probe:

root@ubuntu1604:~/systemtap-tapset-openjdk9# ./create-tapset.sh /usr/lib/jvm/java-9-openjdk-amd64/jre/lib/amd64/server/

root@ubuntu1604:~/systemtap-tapset-openjdk9/systemtap-tapset# grep -A 10 'probe.*class_loaded' *.stp

hotspot-1.9.0.stp:probe hotspot.class_loaded =

hotspot-1.9.0.stp- process("/usr/lib/jvm/java-9-openjdk-amd64/jre/lib/amd64/server/").mark("class__loaded"),

hotspot-1.9.0.stp- process("/usr/lib/jvm/java-9-openjdk-amd64/jre/lib/amd64/server/").mark("class__loaded")

hotspot-1.9.0.stp-{

hotspot-1.9.0.stp- name = "class_loaded";

hotspot-1.9.0.stp- class = user_string_n($arg1, $arg2);

hotspot-1.9.0.stp- classloader_id = $arg3;

hotspot-1.9.0.stp- is_shared = $arg4;

hotspot-1.9.0.stp- probestr = sprintf("%s(class='%s',classloader_id=0x%x,is_shared=%d)",

hotspot-1.9.0.stp- name, class, classloader_id, is_shared);

hotspot-1.9.0.stp-}

启动slowy/App

root@ubuntu1604:~/linux-tracing-workshop# /usr/lib/jvm/java-9-openjdk-amd64/bin/java slowy/App

查看进程probe点:

root@ubuntu1604:~/bcc/tools# /usr/lib/jvm/java-9-openjdk-amd64/bin/jps

33757 sun.tools.jps.Jps

33727 App

root@ubuntu1604:~/bcc/tools# ./tplist.py -p 33727 '*class*loaded'

/usr/lib/jvm/java-9-openjdk-amd64/lib/amd64/server/libjvm.so hotspot:class__loaded

/usr/lib/jvm/java-9-openjdk-amd64/lib/amd64/server/libjvm.so hotspot:class__unloaded

root@ubuntu1604:~/bcc/tools#

开始跟踪:

root@ubuntu1604:/usr/share/bcc/tools# ./trace -p 33727 'u:/usr/lib/jvm/java-9-openjdk-amd64/jre/lib/amd64/server/libjvm.so:class__loaded "%s", arg1'

关闭slowy/App, 可以看到trace到了加载的类

root@ubuntu1604:/usr/share/bcc/tools# ./trace -p 33727 'u:/usr/lib/jvm/java-9-openjdk-amd64/jre/lib/amd64/server/libjvm.so:class__loaded "%s", arg1'

PID TID COMM FUNC -

33727 33728 java class__loaded java/lang/Shutdown

33727 33728 java class__loaded java/lang/Shutdown$Lock

接下来trace一下参数:

root@ubuntu1604:~/systemtap-tapset-openjdk9/systemtap-tapset# grep -A 10 'probe.*method_entry' *.stp

hotspot-1.9.0.stp:probe hotspot.method_entry =

hotspot-1.9.0.stp- process("/usr/lib/jvm/java-9-openjdk-amd64/jre/lib/amd64/server/").mark("method__entry"),

hotspot-1.9.0.stp- process("/usr/lib/jvm/java-9-openjdk-amd64/jre/lib/amd64/server/").mark("method__entry")

hotspot-1.9.0.stp-{

hotspot-1.9.0.stp- name = "method_entry";

hotspot-1.9.0.stp- thread_id = $arg1;

hotspot-1.9.0.stp- class = user_string_n($arg2, $arg3);

hotspot-1.9.0.stp- method = user_string_n($arg4, $arg5);

hotspot-1.9.0.stp- sig = user_string_n($arg6, $arg7);

hotspot-1.9.0.stp- probestr = sprintf("%s(thread_id=%d,class='%s',method='%s',sig='%s')",

hotspot-1.9.0.stp- name, thread_id, class, method, sig);

root@ubuntu1604:~/systemtap-tapset-openjdk9/systemtap-tapset# grep -A 10 'probe.*method_return' *.stp

hotspot-1.9.0.stp:probe hotspot.method_return =

hotspot-1.9.0.stp- process("/usr/lib/jvm/java-9-openjdk-amd64/jre/lib/amd64/server/").mark("method__return"),

hotspot-1.9.0.stp- process("/usr/lib/jvm/java-9-openjdk-amd64/jre/lib/amd64/server/").mark("method__return")

hotspot-1.9.0.stp-{

hotspot-1.9.0.stp- name = "method_return";

hotspot-1.9.0.stp- thread_id = $arg1;

hotspot-1.9.0.stp- class = user_string_n($arg2, $arg3);

hotspot-1.9.0.stp- method = user_string_n($arg4, $arg5);

hotspot-1.9.0.stp- sig = user_string_n($arg6, $arg7);

hotspot-1.9.0.stp- probestr = sprintf("%s(thread_id=%d,class='%s',method='%s',sig='%s')",

hotspot-1.9.0.stp- name, thread_id, class, method, sig);

可以看到参数2和4分别表示class和method。

root@ubuntu1604:/usr/share/bcc/tools# ./argdist -p 33840 -C 'u:/usr/lib/jvm/java-9-openjdk-amd64/jre/lib/amd64/server/libjvm.so:method__entry():char*:arg4' -T 5

[19:09:19]

u:/usr/lib/jvm/java-9-openjdk-amd64/jre/lib/amd64/server/libjvm.so:method__entry():char*:arg4

COUNT EVENT

[19:09:20]

u:/usr/lib/jvm/java-9-openjdk-amd64/jre/lib/amd64/server/libjvm.so:method__entry():char*:arg4

COUNT EVENT

[19:09:21]

u:/usr/lib/jvm/java-9-openjdk-amd64/jre/lib/amd64/server/libjvm.so:method__entry():char*:arg4

COUNT EVENT

[19:09:22]

u:/usr/lib/jvm/java-9-openjdk-amd64/jre/lib/amd64/server/libjvm.so:method__entry():char*:arg4

COUNT EVENT

1 arg4 = getBufIfOpen

4516 arg4 = isPrime

4516 arg4 = isSimplePrime

891794 arg4 = isDivisible

[19:09:23]

u:/usr/lib/jvm/java-9-openjdk-amd64/jre/lib/amd64/server/libjvm.so:method__entry():char*:arg4

COUNT EVENT

2309 arg4 = isPrime

2309 arg4 = isSimplePrime

1036648 arg4 = isDivisible

[19:09:24]

u:/usr/lib/jvm/java-9-openjdk-amd64/jre/lib/amd64/server/libjvm.so:method__entry():char*:arg4

COUNT EVENT

1768 arg4 = isPrime

1768 arg4 = isSimplePrime

1039152 arg4 = isDivisible

[19:09:25]

u:/usr/lib/jvm/java-9-openjdk-amd64/jre/lib/amd64/server/libjvm.so:method__entry():char*:arg4

COUNT EVENT

1467 arg4 = isPrime

1467 arg4 = isSimplePrime

1038429 arg4 = isDivisible

[19:09:26]

u:/usr/lib/jvm/java-9-openjdk-amd64/jre/lib/amd64/server/libjvm.so:method__entry():char*:arg4

COUNT EVENT

1325 arg4 = isPrime

1325 arg4 = isSimplePrime

1038159 arg4 = isDivisible

可以看到大部分时间都在调用isDivisible。

启动slowy/App:

root@ubuntu1604:~/linux-tracing-workshop# /usr/lib/jvm/java-9-openjdk-amd64/bin/java -XX:-Inline -XX:+ExtendedDTraceProbes slowy/App

跟踪函数的entry和return:

root@ubuntu1604:/usr/share/bcc/tools# ./trace -p 33918 'u:/usr/lib/jvm/java-9-openjdk-amd64/jre/lib/amd64/server/libjvm.so:method__entry "%s.%s", arg2, arg4' 'u:/usr/lib/jvm/java-9-openjdk-amd64/jre/lib/amd64/server/libjvm.so:method__return "%s.%s", arg2, arg4'

33918 33919 java method__entry slowy/App.isDivisible

33918 33919 java method__entry slowy/App.isDivisible

33918 33919 java method__entry slowy/App.isPrime

33918 33919 java method__entry slowy/App.isSimplePrime

33918 33919 java method__entry slowy/App.isDivisible

33918 33919 java method__entry slowy/App.isPrime

33918 33919 java method__entry slowy/App.isSimplePrime

33918 33919 java method__entry slowy/App.isDivisible

可以看到有大量的函数entry和return(未来得及显示)。

blog comments powered by Disqus